ChatGPT can do quite a good job of generating routine documents. It is particularly good at generating SaaS legal agreements that resemble the many boilerplate agreements scattered across the Internet. Below is an example prompt and the outputs from ContractGPT.

You can run this example yourself by signing up for a ChatGPT account with OpenAI. Many startups have done this sort of work in the past, but they will probably be quickly eclipsed by the power of large language models like GPT-4 (already available to some people).

Initial Prompt

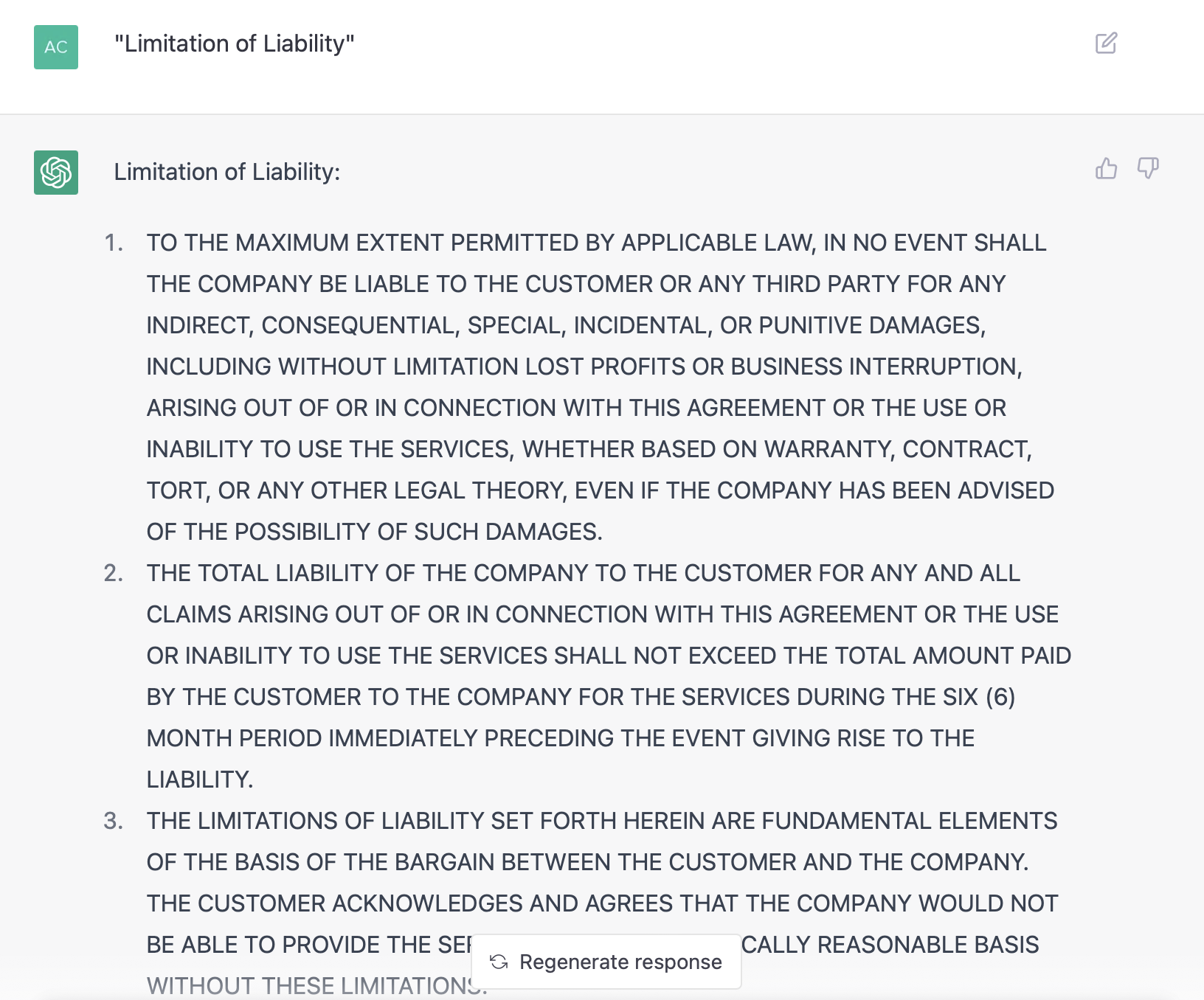

Responses

Results

Although quite terrible at answering Canadian legal questions, ChatGPT is quite good at making contracts of adhesion. The results are obviously generic, but many companies are already using templates they copied off the Internet (probably without much understanding). A slightly GPT-customized version is probably an improvement on that, and a bit of prompt engineering could make it better still.

In my example, ChatGPT has chosen less oppressive terms than many SaaS companies do (particularly in not requiring a 30-day [or longer] notice period, which is a common tactic for auto-renewals). It has chosen a relatively reasonable number of months in its attempt to limit liability. Overall, this system can produce quite a reasonable template that a somewhat skilled lawyer could modify to produce contracts at a very low price.

Compared to some of the nonsense ChatGPT churns out, it is quite good at this task, probably because so many examples of these SaaS agreements were ingested as part of its training data set.

We are probably not far from having GPT-like systems produce very good base agreements to be customized as needed. Of course, the system itself can handle some of the customization, but it will probably take a while until nuanced, skilled drafting is replaced by large language models. Then again, very few businesses have access to such counsel, and for those that do, is it worth the cost? GPT-generated form agreements are very, very cheap. It would cost pennies to generate a slightly customized contract, and a more fine-tuned model could do an even better job for many kinds of standard agreements.

The Prompt

A bit of prompt engineering (the emerging discipline of writing instructions for large language models) could probably do better than my first pass at ContractGPT. The prompt is written out below for ease of copying.

Pretend that you are writing the terms of service for a software service that provides customer support management services. What that means is that the software is for managing support tickets and responding to them. The customer is paying a subscription to the company for use of the software suite. Access is provided entirely online. When you write the terms of service, write them in the style of modern American SaaS terms of service agreements. I will tell you which sections to write, and you will respond by providing the section of the agreement that you are writing. Each time I will give you a prompt for which section and you will produce that section. We will start after I gave the first instruction, so your initial response will be to just confirm these instructions by writing back "Yes, I understand the task".
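For anyone who would rather script this than paste into the chat window, the turn-by-turn structure of the prompt can be sketched in a few lines of Python. This is only a rough sketch under some assumptions: it uses the `role`/`content` message shape that chat-style APIs commonly accept, it makes no network calls, and the helper names, the section titles, and the "Write the section: ..." wording are all hypothetical (they are not taken from my actual session).

```python
from typing import Dict, List

Message = Dict[str, str]

# The setup prompt from this post, copied verbatim.
SETUP_PROMPT = (
    "Pretend that you are writing the terms of service for a software service "
    "that provides customer support management services. What that means is "
    "that the software is for managing support tickets and responding to them. "
    "The customer is paying a subscription to the company for use of the "
    "software suite. Access is provided entirely online. When you write the "
    "terms of service, write them in the style of modern American SaaS terms "
    "of service agreements. I will tell you which sections to write, and you "
    "will respond by providing the section of the agreement that you are "
    "writing. Each time I will give you a prompt for which section and you "
    "will produce that section. We will start after I gave the first "
    'instruction, so your initial response will be to just confirm these '
    'instructions by writing back "Yes, I understand the task".'
)

# The confirmation that the setup prompt asks the model to send back.
EXPECTED_ACK = "Yes, I understand the task"

# Hypothetical section titles, for illustration only.
EXAMPLE_SECTIONS = ["Term and Termination", "Limitation of Liability"]


def start_conversation(setup_prompt: str = SETUP_PROMPT) -> List[Message]:
    """Open the conversation with the setup prompt as the first user turn."""
    return [{"role": "user", "content": setup_prompt}]


def record_reply(messages: List[Message], reply: str) -> List[Message]:
    """Append the model's reply (obtained however you like) as an assistant turn."""
    return messages + [{"role": "assistant", "content": reply}]


def request_section(messages: List[Message], section: str) -> List[Message]:
    """Append a user turn asking for one section (assumed wording)."""
    return messages + [{"role": "user", "content": f"Write the section: {section}"}]


def is_acknowledged(messages: List[Message]) -> bool:
    """Check that the second turn is the model confirming the instructions."""
    return (
        len(messages) >= 2
        and messages[1]["role"] == "assistant"
        and EXPECTED_ACK.lower() in messages[1]["content"].lower()
    )


if __name__ == "__main__":
    convo = start_conversation()
    convo = record_reply(convo, "Yes, I understand the task.")  # stand-in reply
    if is_acknowledged(convo):
        convo = request_section(convo, EXAMPLE_SECTIONS[0])
    for turn in convo:
        print(turn["role"], "->", turn["content"][:60])
```

The resulting list of messages is what you would hand to whichever chat model you are using, appending each generated section with `record_reply` before asking for the next one so the model keeps the earlier sections in context.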

Fairness

Do the makers of large language models, like OpenAI, have an obligation to consider contractual fairness? If LLMs (like ChatGPT) are trained on one-sided contracts and then spit out one-sided contracts to users, they may not be doing consumers much of a favour. There will be some interesting ethical issues coming up in this realm. Although probably no worse than the status quo of having a commercial lawyer draft up an agreement (such agreements are often one-sided, but not always), ChatGPT and similar systems pose a risk of creating systemic issues with fairness in the world of contracting by reproducing current systems into the future (rather than permitting the more rapid change that lawyers might instigate).

With fewer humans in the loop, questions about fairness may take on a different meaning or lose meaning altogether. A system that is synthesizing new contracts based on a library of old ones isn't taking a position on anything, and yet the results will have significant impacts on the contracting humans who use the agreements it generates.