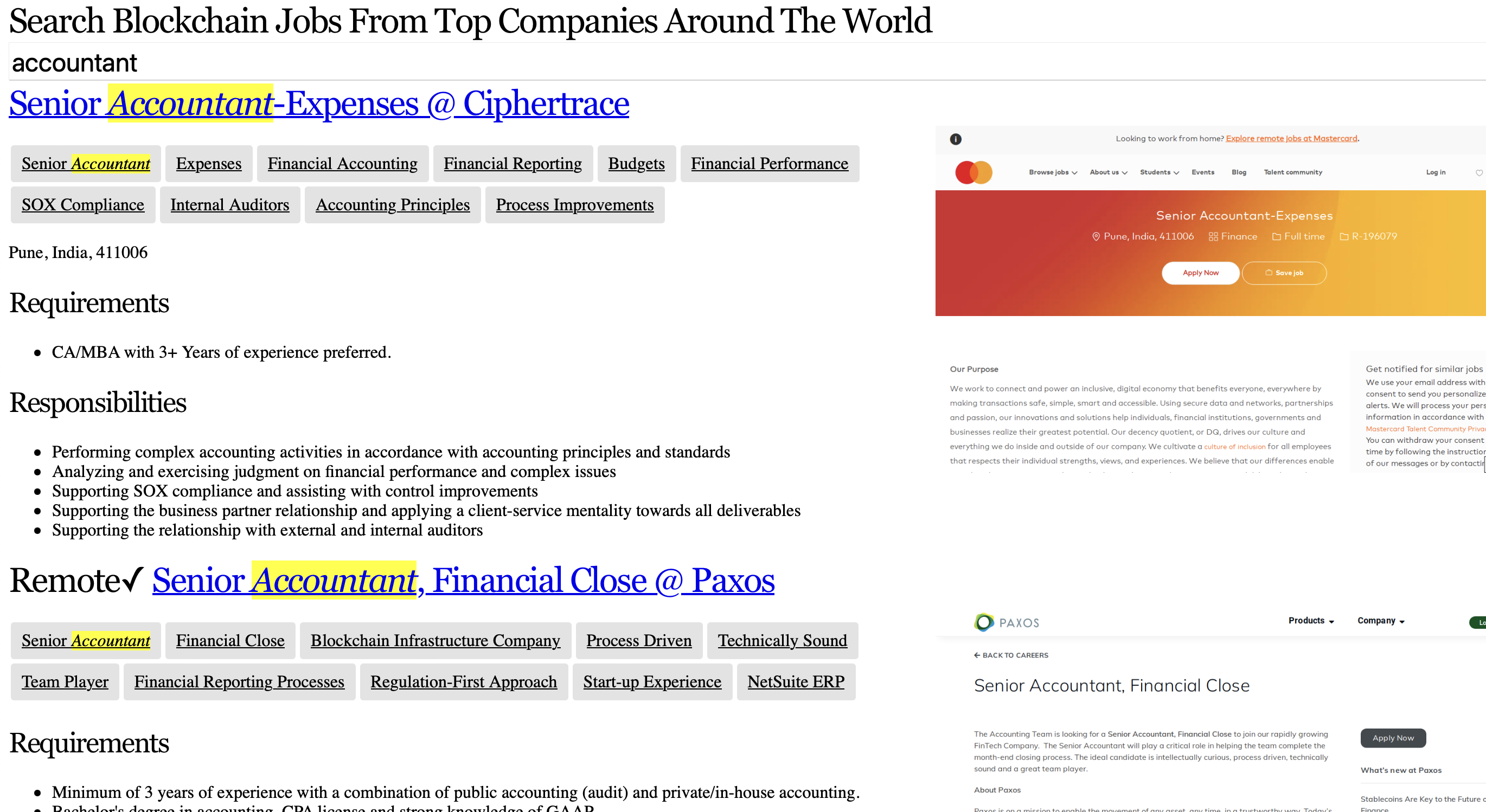

My latest experiment with search is a search engine for jobs in blockchain: https://jobs.cameronhuff.com. It's a crawler that goes through the career pages of about 80 blockchain companies and finds all the job listing pages. The pages are then run through a filtering process and on to ChatGPT. The ChatGPT API is used to extract structured data about each job and to generate new data, like tags. The AI-summarized job data is then piped into a Meilisearch instance to make it searchable.
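As a rough illustration of the last step, here is a minimal sketch that turns AI-extracted job records into Meilisearch documents and builds the request that adds them to an index. The server URL, the `jobs` index name, the record fields, and the use of a SHA-1 hash of the listing URL as the document id are all assumptions for illustration, not details of this project. Meilisearch only accepts document ids made of letters, digits, hyphens and underscores, which is why the raw URL isn't used directly.

```python
import hashlib
import json
import urllib.request

MEILI_URL = "http://localhost:7700"  # assumption: a local Meilisearch instance
INDEX_UID = "jobs"                   # hypothetical index name


def job_to_document(job: dict) -> dict:
    """Turn one AI-extracted job record into a Meilisearch document.

    The id is a SHA-1 hex digest of the listing URL, so it only contains
    characters Meilisearch allows in document ids and stays stable across crawls.
    """
    doc_id = hashlib.sha1(job["url"].encode("utf-8")).hexdigest()
    return {**job, "id": doc_id}


def build_add_documents_request(jobs: list[dict], api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the add-or-replace documents route."""
    body = json.dumps([job_to_document(j) for j in jobs]).encode("utf-8")
    return urllib.request.Request(
        f"{MEILI_URL}/indexes/{INDEX_UID}/documents?primaryKey=id",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )
```

Sending it is one call, `urllib.request.urlopen(req)`; Meilisearch queues the addition as an asynchronous task. Hashing the URL means that re-crawling the same listing replaces the existing document instead of creating a duplicate.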

1000 Blockchain Jobs

The system I've built for jobs has around 1000 AI-extracted jobs (see below for an explanation of how that works). I sampled nearly 100 companies, focusing on larger ones that I know of. I also included a few regulators that work on cryptocurrency-related files. The list can always be extended by adding new sites, which are then crawled and have their data extracted automatically.

Why ChatGPT? How Does It Work?

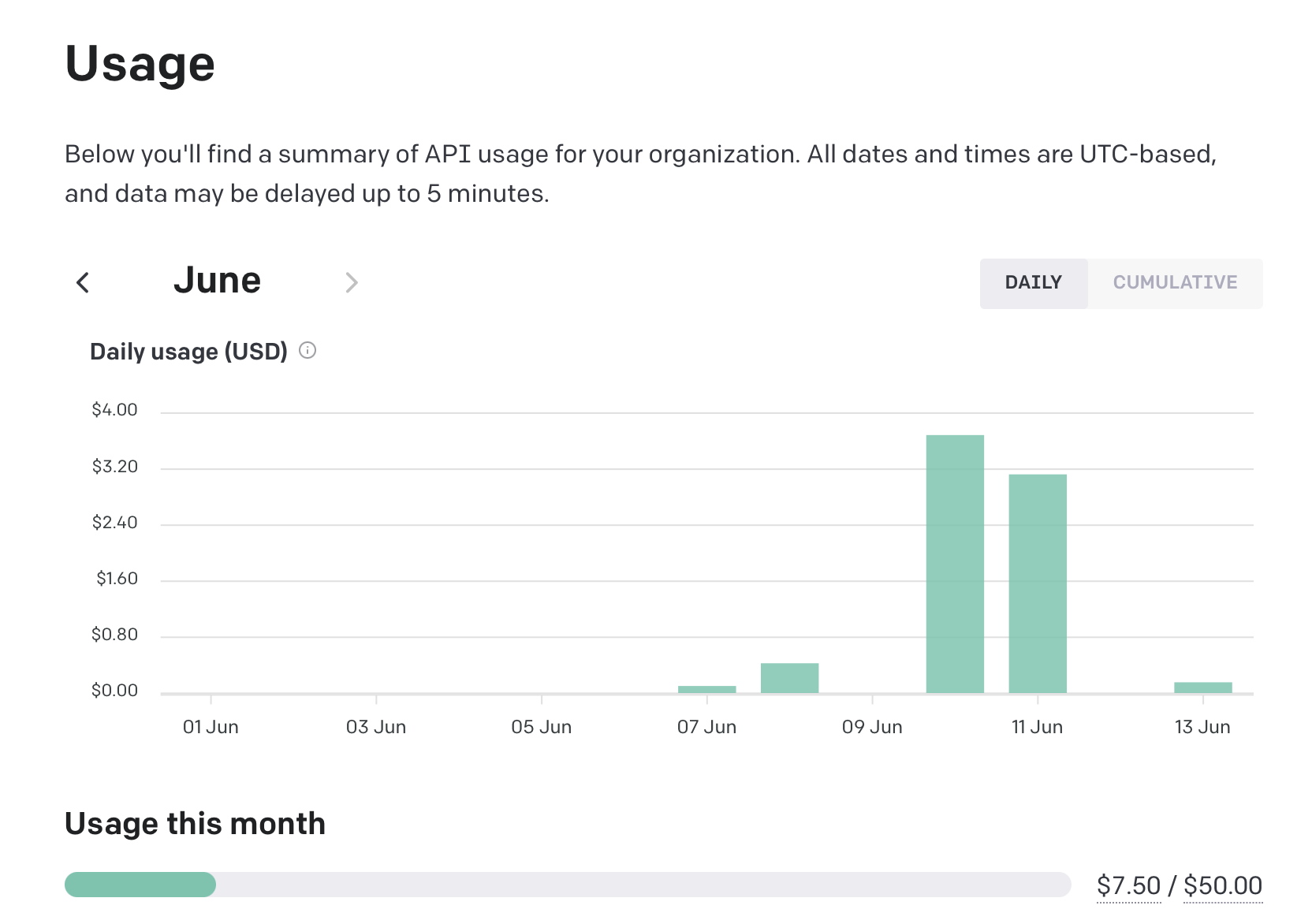

ChatGPT is used for this project because it's quite good at data extraction. Google's Vertex AI system, while currently free, was not up to the task. ChatGPT isn't free, but it's quite affordable: testing out this idea and processing roughly 2,000 web pages has cost me $10. The system extracted around 1000 jobs, and did so in a way that might be better than what a person could do. It also did this (tedious) work much faster than a human could.
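Put per unit, those rough totals come to about half a cent per page processed and about a cent per job extracted:

```latex
\frac{\$10}{\approx 2000\ \text{pages}} \approx \$0.005\ \text{per page},
\qquad
\frac{\$10}{\approx 1000\ \text{jobs}} \approx \$0.01\ \text{per job}
```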

ChatGPT isn't just cheap; in this application it's essential. The ChatGPT API allows data from web crawling to be used in a way that normal scraping can't, because the AI system actually recognizes what is on the page and decides whether it's a job listing or some other kind of page (like a contact page, a listing page with links to specific jobs, or any number of other pages that don't contain proper job listing information). While a human can easily tell page types apart, this is a task that typical programs struggle with. ChatGPT, being based on a giant transformer model, can understand context and make a judgement call. This is millions of times more computationally intensive than typical programming methods, but the results are great.
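One way to have the model make that call and extract the data in a single request is to ask for a JSON object that includes a flag for whether the page is a job listing at all. The sketch below only builds the request body for OpenAI's Chat Completions endpoint; the field names, prompt wording, model name, and truncation limit are hypothetical placeholders, not the prompt this project uses.

```python
import json

# Hypothetical field list; the post does not say which fields are extracted.
JOB_FIELDS = ["is_job_listing", "title", "company", "location", "remote", "tags"]

SYSTEM_PROMPT = (
    "You classify web pages and extract job data. Reply with a JSON object "
    "with the keys: " + ", ".join(JOB_FIELDS) + ". Set is_job_listing to false "
    "for contact pages, lists of links to jobs, or any page that is not a "
    "single job posting."
)


def build_extraction_payload(page_text: str, model: str = "gpt-3.5-turbo",
                             max_chars: int = 12000) -> dict:
    """Build a Chat Completions request body for one crawled page."""
    return {
        "model": model,  # placeholder: the post does not name the model
        "response_format": {"type": "json_object"},  # ask for JSON-only output
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            # Truncate long pages to keep the token cost per page bounded.
            {"role": "user", "content": page_text[:max_chars]},
        ],
    }
```

The body would be POSTed to `https://api.openai.com/v1/chat/completions` with an `Authorization: Bearer` header, and the model's reply text arrives in `choices[0].message.content`. JSON mode requires the word "JSON" to appear somewhere in the messages, which the system prompt covers.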

Because ChatGPT isn't free, there are a few checks to see whether a page might be a job listing before it is sent. The most useful signal is whether various job-related words (in English and French) appear on the page. If they do, the page moves on to the next stage in the pipeline, and ultimately on to ChatGPT. This method reduces the cost by at least 80%. Most similar programs that run web crawler output through ChatGPT would probably need the same steps to save on ChatGPT fees (which are probably subsidized right now by OpenAI, the makers of ChatGPT).
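A minimal sketch of that kind of keyword pre-filter is below. The word lists and the three-distinct-hits threshold are illustrative assumptions; the post doesn't say which words or thresholds the real pipeline uses.

```python
import re

# Hypothetical keyword lists; the real English and French lists aren't published.
JOB_WORDS = {
    "en": ["responsibilities", "qualifications", "requirements", "apply", "salary", "full-time"],
    "fr": ["responsabilités", "qualifications", "exigences", "postuler", "salaire", "temps plein"],
}


def looks_like_job_page(text: str, min_hits: int = 3) -> bool:
    """Cheap pre-filter: only pages with enough distinct job words go on to ChatGPT."""
    lowered = text.lower()
    hits = set()  # a set, so a word listed in both languages counts once
    for words in JOB_WORDS.values():
        for word in words:
            # Whole-word match, so "apply" does not match inside "applying".
            if re.search(r"\b" + re.escape(word) + r"\b", lowered):
                hits.add(word)
    return len(hits) >= min_hits
```

Requiring several distinct words, rather than any single one, keeps pages like a newsletter signup ("apply now") from slipping through, at the cost of occasionally missing very terse postings.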

Screenshots

Each job listing includes a screenshot of, and a link to, the original job posting. The screenshots were generated with Playwright, which also extracts the text from each webpage. Playwright is a library for controlling web browsers programmatically; in this system, I'm running it in headless mode. It's connected to a larger system I have for indexing websites, so the search engine part of this system was already written and running.
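For reference, a screenshot-plus-text capture with Playwright's Python sync API might look like the following. The file naming scheme and output directory are hypothetical; Playwright is imported inside `capture` so the naming helper can be used without a browser installed.

```python
import hashlib
from pathlib import Path


def screenshot_path(url: str, out_dir: str = "screenshots") -> Path:
    """Stable file name per job URL (hypothetical naming scheme)."""
    digest = hashlib.sha1(url.encode("utf-8")).hexdigest()[:16]
    return Path(out_dir) / f"{digest}.png"


def capture(url: str, out_dir: str = "screenshots") -> tuple[Path, str]:
    """Take a full-page screenshot in headless Chromium and return (path, visible text).

    Requires `pip install playwright` and `playwright install chromium`.
    """
    from playwright.sync_api import sync_playwright  # imported lazily

    path = screenshot_path(url, out_dir)
    path.parent.mkdir(parents=True, exist_ok=True)
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url, wait_until="networkidle")  # let client-side job boards render
            page.screenshot(path=str(path), full_page=True)
            text = page.inner_text("body")  # rendered text, scripts and markup excluded
        finally:
            browser.close()
    return path, text
```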

Errors From Using ChatGPT?

There are probably some errors introduced by ChatGPT; it isn't perfect. I tried to err on the side of excluding malformed data rather than including it, but sometimes the page contents aren't extracted properly.
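Erring on the side of exclusion can be made mechanical: parse the model's reply and drop the record if anything about it is off. This is a minimal sketch, assuming the reply is a JSON object with hypothetical `is_job_listing`, `title`, `company` and `tags` fields; it is not the validation this project actually performs.

```python
import json
from typing import Optional


def parse_job(reply: str) -> Optional[dict]:
    """Return a job record, or None if the model's reply is unusable.

    Anything malformed is dropped rather than repaired.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None  # not valid JSON at all
    if not isinstance(data, dict) or data.get("is_job_listing") is not True:
        return None  # not an object, or the model said it isn't a job page
    for key in ("title", "company"):
        value = data.get(key)
        if not isinstance(value, str) or not value.strip():
            return None  # required text field missing or blank
    tags = data.get("tags", [])
    if not isinstance(tags, list) or not all(isinstance(t, str) for t in tags):
        return None  # tags must be a list of strings
    return data
```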

Future Directions

It might be possible to have someone upload their resume and then be shown jobs based on whether their experience is sufficient for the role. I'm not sure how reliable this would be, because in my limited tests, requirements were written in many different ways, and ChatGPT has a hard time understanding this sort of nuance. Even job applicants can struggle to know whether they meet the requirements for specialized jobs.

Uploading a resume to use as a source of search keywords would probably work well.
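As a rough sketch of the keyword idea, assuming a plain-text resume, the most frequent non-trivial words could be used as the query. Simple word counting is a stand-in chosen for illustration, not something the post describes, and the stop-word list is a tiny illustrative one.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; a real one would be much longer.
STOP_WORDS = {"and", "the", "for", "with", "of", "to", "in", "a", "an",
              "on", "at", "i", "my", "was", "as"}


def resume_query(resume_text: str, top_n: int = 8) -> str:
    """Pick the most frequent non-trivial resume words as a search query."""
    # Keep tokens like "c++" and "c#" intact; drop one-letter tokens and stop words.
    words = [w for w in re.findall(r"[a-z0-9+#]+", resume_text.lower())
             if len(w) > 1 and w not in STOP_WORDS]
    # most_common orders ties by first appearance in the resume.
    return " ".join(w for w, _ in Counter(words).most_common(top_n))


print(resume_query("Solidity developer. Built Solidity and Rust smart contracts in Rust and Go.", top_n=3))
# solidity rust developer
```

Ordering by frequency matters with Meilisearch's default `last` matching strategy: when there aren't enough results containing every term, it drops query terms starting from the end, so the least frequent words go first.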

Suggesting new keywords based on the ones typed (i.e., keyword suggestion) may also work. This would only work with ChatGPT coming up with the keywords, because regular synonym dictionaries don't do a good job of capturing job-related words.

Browsing for jobs is another obvious way to make this more useful.

Having facets that work as filters may also be useful. Facets are searchable properties that can be used as filters: for example, filtering by job location or by whether a job is remote-friendly.
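In Meilisearch, facets of this kind are attributes listed in the index's `filterableAttributes` setting, which can then be used in a `filter` expression and requested as facet counts with `facets`. The sketch below builds such a search body; the `location` and `remote` attributes are hypothetical field names for the extracted job data.

```python
FILTERABLE = ["location", "remote"]  # hypothetical facet attributes


def meili_filter(filters: dict) -> str:
    """Build a filter expression such as: location = "Toronto" AND remote = true"""
    parts = []
    for attr, value in filters.items():
        if isinstance(value, bool):
            parts.append(f"{attr} = {str(value).lower()}")  # true / false
        else:
            # Escape backslashes and double quotes inside the quoted value.
            escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
            parts.append(f'{attr} = "{escaped}"')
    return " AND ".join(parts)


def search_body(query: str, filters: dict) -> dict:
    """Body for a search request that also asks for facet counts."""
    body = {"q": query, "facets": FILTERABLE}
    if filters:
        body["filter"] = meili_filter(filters)
    return body
```

The body would be POSTed to `/indexes/jobs/search` (index name assumed). Asking for `facets` returns per-value counts in `facetDistribution`, which is what a filter sidebar would display.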

Having the search be informed by data about the company, rather than just the job, might also be useful. I experimented with using crawled pages to generate company summaries, but it made the whole system too complex, because the main website then needs to be indexed along with the career pages. They would need to be done separately because the system only crawls 1 or 2 links away from the main job listing, which speeds up crawling and reduces the number of pages that need to be processed by ChatGPT (because of the cost).
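That depth limit amounts to a breadth-first crawl that stops expanding pages once they are a fixed number of links from the starting page. A minimal sketch, where `get_links` is a hypothetical hook that fetches a page and returns its absolute links (passed in so the logic runs offline), and staying on the starting host is an added assumption:

```python
from collections import deque
from typing import Callable, Iterable
from urllib.parse import urlparse


def crawl(start_url: str, get_links: Callable[[str], Iterable[str]],
          max_depth: int = 2) -> dict[str, int]:
    """Breadth-first crawl that stops max_depth links away from start_url.

    Returns {url: depth} for every page reached.
    """
    host = urlparse(start_url).netloc
    seen = {start_url: 0}
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        depth = seen[url]
        if depth == max_depth:
            continue  # pages at the limit are kept but not expanded
        for link in get_links(url):
            if urlparse(link).netloc != host or link in seen:
                continue  # stay on the same site and skip pages already queued
            seen[link] = depth + 1
            queue.append(link)
    return seen
```

Raising `max_depth` from 1 to 2 can multiply the number of pages fetched, and every extra page is a potential ChatGPT call, which is the cost trade-off described above.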

Interested?

Feel free to send me an email: addison@cameronhuff.com.