Many lawyers and businesses have been discussing the idea of ChatGPT and similar LLMs taking over their work. There's a lot of promise about revolutionizing the way people work. But in reality, these tools are a very long way from replacing humans. They're not good at law, they fail to properly understand questions, they fail to identify gaps in the facts that require follow-up questions, and they're susceptible to making up law that doesn't exist. Below are a few examples that illustrate these problems.

Imagining What A Case Is About

ChatGPT will happily make up cases, complete with imaginary citations. Sometimes it cites a real case but makes up what it's about, like in the example below.

One interesting thing about the above case is that ChatGPT used to give a different answer to this question. When I tried the same question a month ago, it gave a wrong citation for the same case name. I think someone in quality control has edited the answer to use the right citation, or perhaps they've ingested more Canadian case law or other sources to make the answers better.

Imagining Cases And Citations

Some ChatGPT queries result in made up cases with made up citations. Everything is wrong about the answer but it looks right! It would fool anyone who doesn't look up the case and find out that it stands for a different principle. The case below does exist, but it's actually from 1941 and is about liability for a car that hit someone while a CP Rail employee was delivering a key. What ChatGPT says is false, and the citation ("[1992] 2 S.C.R. 311") doesn't exist. But it looks real!

Incorrect Application Of Employment Laws

ChatGPT makes a mistake in explaining how employment laws work. It assumes, logically enough, that the Canada Labour Code applies to federally incorporated companies and that provincial employment laws apply to provincial corporations. This is not true. The Canada Labour Code is for certain federally regulated businesses like railways, and whether or not it applies is not based on the type of company. Federal and provincial incorporations are the same for employment purposes.

Imagining Provincial Laws

There is a law in Ontario for consumer protection, but it doesn't mandate a 36% cap on non-government loans like ChatGPT confidently claims. I don't know where it got that from, but it seems some American jurisdictions have a law like this. ChatGPT's answer is wrong because it makes up a legal rule that doesn't exist.

Failing To Understand Provincial vs. Federal Jurisdiction + Imagining Laws That Don't Exist

Here, ChatGPT imagines a federal securities law called the Securities Act, complete with a made up RSC citation. There is no such law, and the Supreme Court has twice rejected even the idea of the federal government making such a law. ChatGPT also imagines that the CSA enforces this law, which is not true. More subtly, it fails to understand that the question isn't about securities at all; it would be about federal registration with FINTRAC. Instead, ChatGPT assumes this is about securities (which are provincially regulated). The argument about regulatory overreach wouldn't be one that any reasonable lawyer would present about anti-money laundering laws. I think it'll be a while until litigators are replaced by ChatGPT.

Question: An Ontario-licensed lawyer stands up in court and gives a two minute speech about why his client has the right to operate a cryptocurrency dealer business without any federal registration. What does he say?

As an AI language model, I cannot provide legal advice or opinion. However, in general, a lawyer arguing in court that their client has the right to operate a cryptocurrency dealer business without federal registration may present arguments based on the following:

The Canadian Constitution and Charter of Rights and Freedoms: The lawyer may argue that the client's right to operate a business is protected under Section 7 of the Charter, which guarantees the right to life, liberty, and security of the person, and Section 2, which guarantees freedom of expression and association. The lawyer may argue that federal registration requirements for cryptocurrency dealers infringe upon these rights.

Lack of federal jurisdiction: The lawyer may argue that the federal government does not have jurisdiction over the client's business, as cryptocurrency may not fall under the definition of a "security" under federal law, and therefore the federal registration requirement does not apply.

Regulatory overreach: The lawyer may argue that the federal registration requirement for cryptocurrency dealers is overly broad and amounts to regulatory overreach. The lawyer may argue that the requirement is not necessary to protect consumers or prevent money laundering, and that the provincial government is better equipped to regulate the client's business.

It is important to note that the specific arguments a lawyer may use would depend on the facts of the case and the applicable laws and regulations.

Question: What federal law defines what a security is?

The federal law in Canada that defines what a security is the "Securities Act" (R.S.C., 1985, c. S-4). The Act provides a broad definition of a security, which includes shares, bonds, debentures, and other instruments commonly understood to be securities. The Act also includes in the definition of a security certain investment contracts, which can include transactions involving cryptocurrencies or other digital assets. The Act is administered and enforced by the Canadian Securities Administrators (CSA), which is a council of securities regulators from each province and territory.

Failing To Identify Conflicts

ChatGPT isn't programmed with the nuance of how professionals actually work. Although it's a bit unfair to give ChatGPT a question that involves these rules, I think it's a good test of the idea that these things can replace lawyers. In the question below, the AI happily starts in on answering what seem to be the problems, but it misses the fact that these people very likely have a significant conflict of interest between them, and the lawyer can't help all four of them at once without addressing the conflict first. Starting in on the problems isn't the right solution.

Question: Two friends start a business together. After three years they decide that they should incorporate the business. One of the friends hasn't paid their taxes in years. The other friend doesn't know if they are allowed to start a business since they only have PR status. The business and friends are both in Ontario. The money will come from a third friend who they've known for years and wants to get in on the great opportunity they've been working on, which is a new app for sharing photos of your cat. The friends call it "The CatBook". It'll be the next big thing and maybe someone will make a movie about it. But then, a fourth friend who they'd been paying a bit of money to says that he is quitting to start DogBook, and he says he doesn't care what they think about DogBook, he's going to get rich. All four of these people come to you, together, and ask for your advice. What is the first thing that you, the startup lawyer, tell them?

As a startup lawyer, the first thing I would tell them is that they need to address several important issues before incorporating their business and launching "The CatBook" app.

Tax issues: It's essential that the friend who hasn't paid taxes gets their tax situation sorted out before incorporating the business. Failure to do so could result in legal and financial consequences that could harm the business and the other founders.

Immigration status: As the friend only has PR status, they need to ensure they have the necessary permits to work in Canada and be a director of the company. It's important to ensure compliance with immigration laws to avoid future legal issues.

Incorporation: It's recommended that they incorporate their business as a separate legal entity, which will help protect their personal assets and limit their liability. This would also require drafting a shareholders' agreement that outlines each founder's rights, responsibilities, and ownership stake in the company.

Intellectual property: Before launching their app, they need to ensure that they have proper trademark and copyright protections in place for their brand name, logo, and app design.

Competition: They should also research whether their app idea infringes on any existing intellectual property or competes with similar apps in the market.

Overall, starting a business involves numerous legal considerations and it's essential that they consult with a lawyer to address these issues before incorporating the business and launching their app. Additionally, they may also need to address the issue of the departing fourth friend who is starting a competing business, which could potentially raise further legal concerns such as confidentiality and non-compete clauses.

Bad Math And Lack Of Common Sense

ChatGPT gives answers that no one would give. It gives answers that are wrong because of bad logic or bad assumptions. Below it seems to illustrate both. Although the question is admittedly a bit vague, ChatGPT's answer wouldn't be right whether the question is asking how many people were on the island originally or at the 12 month point, and it assumes that there's no other change in the population. Notice how it concludes that one of the people gave birth at the five month point, when that can't actually be inferred.

Complex Wrong Answers

ChatGPT aggressively pattern matches to provide answers. This is why it's so good at standardized tests that follow certain formats. But this same tendency can cause it to provide answers to nonsensical questions that would stop a human in their tracks. Below, ChatGPT makes a number of assumptions that lead to an answer that looks great but is meaningless because the question can't be answered. The question lacks a key fact: the ratio of good to bad dolphins. But ChatGPT powers past that and makes up some numbers. This isn't a law question, but it shows the potential for generating complex answers that are completely wrong but look right.

Question: A shining light on a hill rotates at a speed that allows it to make a full rotation every five minutes. Every full sweep of the light that passes over the harbour below has a chance of illuminating a dolphin. Assume that the chance of a dolphin being present in the harbour is 1/50 per hour, and a dolphin has a 20% chance of being illuminated with each sweep of the light. What can we say about the number of good or bad dolphins present in the harbour in a one hour period? Provide the calculations for good and bad dolphins separately.

Let's start by calculating the probability of a dolphin being illuminated during a single rotation of the light. Since the light makes a full rotation every five minutes, it completes 12 full rotations per hour. Therefore, the probability of a dolphin being illuminated during a single rotation is:

P(illuminated during one rotation) = 0.2 * (1/50) = 0.004

Now, let's consider the number of dolphins in the harbor. Since the probability of a dolphin being present in the harbor is 1/50 per hour, we can model the number of dolphins in the harbor as a Poisson distribution with a mean of λ = 1/50. The Poisson distribution models the number of occurrences of a rare event in a fixed interval of time, which is appropriate for this scenario.

To calculate the probability of a certain number of dolphins being illuminated during a one-hour period, we can use the Poisson distribution formula:

P(X = k) = (e^-λ * λ^k) / k!

where X is the number of dolphins illuminated during a one-hour period, k is the number of dolphins, e is the mathematical constant e (approximately 2.71828), and k! is the factorial of k.

Let's start with the probability of zero dolphins being illuminated (i.e., bad dolphins). Plugging in λ = 1/50 and k = 0, we get:

P(bad dolphins) = P(X = 0) = (e^-λ * λ^0) / 0! = e^(-1/50) ≈ 0.9802

Therefore, the probability of no dolphins being illuminated during a one-hour period is approximately 0.9802.

Next, let's calculate the probability of one or more dolphins being illuminated (i.e., good dolphins). We can use the complement rule to find this probability:

P(good dolphins) = 1 - P(bad dolphins) ≈ 1 - 0.9802 ≈ 0.0198

Therefore, the probability of one or more dolphins being illuminated during a one-hour period is approximately 0.0198.

To find the expected number of good and bad dolphins, we can use the mean of the Poisson distribution, which is also λ. Therefore, the expected number of bad dolphins is:

E(bad dolphins) = λ = 1/50 ≈ 0.02

And the expected number of good dolphins is:

E(good dolphins) = 1 - e^(-λ) ≈ 1 - 0.9802 ≈ 0.0198

Therefore, on average, we would expect to see approximately 0.02 bad dolphins and 0.0198 good dolphins during a one-hour period.
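For contrast, here is a rough sketch of what the numbers in the question can actually support. It assumes (and these are my assumptions, not part of the question) that a dolphin that is present stays for the whole hour and that each sweep of the light is independent. The names in the code are just illustrative.

```python
# Rough sketch of what the question's numbers support.
# Assumptions (not stated in the question): a present dolphin stays for the
# whole hour, and each sweep of the light is independent of the others.

SWEEPS_PER_HOUR = 60 // 5   # one full rotation every five minutes -> 12 sweeps
P_PRESENT = 1 / 50          # chance a dolphin is in the harbour in a given hour
P_LIT_PER_SWEEP = 0.20      # chance a present dolphin is lit by a single sweep


def p_lit_at_least_once(sweeps: int = SWEEPS_PER_HOUR,
                        p_sweep: float = P_LIT_PER_SWEEP) -> float:
    """Chance that a present dolphin is illuminated by at least one sweep."""
    return 1 - (1 - p_sweep) ** sweeps


def p_dolphin_seen_in_hour() -> float:
    """Chance that a dolphin is both present and illuminated within the hour."""
    return P_PRESENT * p_lit_at_least_once()


if __name__ == "__main__":
    print(f"lit at least once, if present: {p_lit_at_least_once():.4f}")
    print(f"present and lit within the hour: {p_dolphin_seen_in_hour():.4f}")
```

Under those assumptions a present dolphin is lit at least once about 93% of the time, so the chance of seeing a dolphin in the hour is a bit under 2%. Nothing in this calculation, or in the question, says anything about which dolphins are good or bad. That's the part that can't be answered.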

Bad Advice: A Rabbit Gets Eaten

ChatGPT's pattern matching results in answers that a person wouldn't give. In the example below, the computer's answer is that the rabbit should race and bet on itself, but doing that means entering a race in which it has a 50% chance of dying. ChatGPT's answer doesn't properly take into account the consequences of the possibilities. This question is a bit unfair, and doesn't seem like a legal question, but real life legal problems often include complex fact patterns with different possibilities that depend on an accurate model of consequences (which ChatGPT doesn't have).

Question: A lion asks a rabbit if it would like to have a race. The rabbit declines on the grounds that the lion might eat it during the race. As the race is about to begin, the lion eats a different herbivore. The rabbit, reacting quite rightly, threatens to pull out of the race. The race management tells the rabbit there's no way out because they've already taken bets on the race and it's too late. It's race or else. If the race odds are 20:1 in favour of the rabbit, and the chance of being eaten is 50%, how much should the rabbit bet on the race?

This is a tricky problem as it involves probability and risk assessment. We can approach it by calculating the expected value of the race for the rabbit and using that to determine how much it should bet.

Let's start by calculating the expected value of winning the race, taking into account the risk of being eaten. The probability of winning the race is 20/21 (since the odds are 20:1 in favor of the rabbit), while the probability of being eaten is 1/2. Therefore, the expected value of winning the race is:

(20/21) x (1 - 1/2) = 10/21

This means that for every $1 the rabbit bets, it can expect to win back $10/21 if it wins the race.

Now let's consider the cost of not racing. If the rabbit withdraws from the race, it loses the opportunity to win the expected value of 10/21, but it also avoids the risk of being eaten. So the cost of not racing is:

0 x (1/2) + (-10/21) x (1/2) = -5/21

This means that if the rabbit doesn't race, it can expect to lose $5/21 on average.

Given these calculations, we can see that the expected value of racing is higher than the expected value of not racing. Therefore, it makes sense for the rabbit to race. As for how much it should bet, that depends on the rabbit's risk tolerance and its available resources. A general rule of thumb for managing risk is to only bet what you can afford to lose, so the rabbit should only bet an amount that it can bear to lose in the event that it doesn't win. Without knowing more about the rabbit's financial situation and risk preferences, it's hard to give a specific recommendation on how much to bet.
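To show what taking the consequences into account might look like, here is a minimal sketch of an expected-value calculation that puts a cost on being eaten. Everything beyond the question's own numbers is an assumption of mine: I read "20:1 in favour" as a 20/21 chance of winning with a fair payout of 1/20 per unit staked, and `death_cost` is a made-up stand-in for how bad it is to be eaten.

```python
# Minimal sketch: expected value of racing once being eaten has a cost.
# Assumptions (mine, not the question's): "20:1 in favour" means a 20/21
# chance of winning at a fair net payout of 1/20 per unit staked, and
# death_cost is a hypothetical number for how bad being eaten is.

def expected_value(stake: float,
                   p_win: float = 20 / 21,
                   net_payout_per_unit: float = 1 / 20,
                   p_eaten: float = 0.5,
                   death_cost: float = 1_000_000.0) -> float:
    """Expected outcome of racing, in the same units as the stake."""
    # If the rabbit survives, the bet resolves normally.
    bet_ev = p_win * stake * net_payout_per_unit - (1 - p_win) * stake
    # If the rabbit is eaten, it loses the stake and pays the death cost.
    eaten_outcome = -(stake + death_cost)
    return (1 - p_eaten) * bet_ev + p_eaten * eaten_outcome


if __name__ == "__main__":
    for stake in (0, 10, 100):
        print(stake, round(expected_value(stake), 2))
```

With fair odds the bet itself is worth nothing on average, so the result is dominated by the 50% chance of being eaten, and every extra dollar staked only makes it worse. On these assumptions the sensible amount to bet is zero, and the real problem is how to get out of the race.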

Illogical Problem Solving

The reasoning abilities of large language models are still more constrained than most people think. The example below shows ChatGPT making incorrect assumptions and giving an answer that is confidently wrong. It's unable to separate out what's important and what isn't, producing complex answers that are wrong. The answer to the question below is 5km because that's how long the road is, so 5km is the furthest that anyone can walk down the road. They could easily get to the end in the time provided.

Four friends go for a walk down a road. Along the road they stop to visit with other friends. Sometimes they visit on their own, and other times in groups of three friends, but never all four friends together during any one visit. The road is 5km. If a visit takes no more than five minutes then what is the furthest down the road that any friend can walk down the road? Assume each person walks at a rate of 4km per hour and there is a three hour time limit.

Let's consider the scenario where the four friends walk together for the entire 5km. They would cover this distance at a speed of 4km per hour, so it would take them 5/4 = 1.25 hours, or 75 minutes.

Now, since they have a three hour time limit, they have a total of 180 minutes to spend on visiting with friends. Let's assume that they spend x minutes visiting on their own and y minutes visiting in groups of three.

Then, the total time spent visiting can be expressed as:

x + 3y = 180

We want to find the furthest down the road that any friend can walk, so let's assume that one friend walks the entire 5km on their own, while the other three friends are visiting. This friend can walk at a speed of 4km per hour for a maximum of 75 minutes, or 5/4 hours. Therefore, the furthest this friend can walk down the road is:

distance = speed x time = 4 x (5/4) = 5km

Now, let's consider the other three friends. They are visiting in groups of three, so there are a total of 4 possible groups of three friends that can visit together. If each group of three friends visits for y minutes, then the total time spent visiting in groups of three is:

4y

Since each visit takes no more than 5 minutes, we know that y must be less than or equal to 5. Therefore, the total time spent visiting can be expressed as:

x + 4y ≤ 180

Now, we want to find the furthest down the road that the other three friends can walk while their fourth friend walks the entire 5km. We can assume that each of these friends walks at a speed of 4km per hour for a maximum of (180 - x - 4y)/3 minutes. Therefore, the furthest these friends can walk down the road is:

distance = speed x time = 4 x (180 - x - 4y)/3

We want to maximize this distance, subject to the constraints we have already identified. We can use the method of Lagrange multipliers to solve this optimization problem.

Let L be the Lagrangian function defined as:

L = 4(180 - x - 4y)/3 + λ(x + 4y - 180)

where λ is the Lagrange multiplier. We want to find the values of x, y, and λ that maximize L, subject to the constraints:

x + 3y = 180

x + 4y ≤ 180

0 ≤ x ≤ 180

0 ≤ y ≤ 5

Taking the partial derivatives of L with respect to x, y, and λ, and setting them equal to zero, we get:

dL/dx = -4/3 + λ = 0

dL/dy = -16/3 + 4λ = 0

dL/dλ = x + 4y - 180 = 0

Solving these equations, we get:

λ = 4/9

x = 180 - 4y/3

y = 45/4

Since y must be less than or equal to 5, we can take y = 5, which gives us:

x = 165/4

λ = 4/9

Therefore, the furthest down the road that each of the three friends can walk, while their fourth friend walks the entire 5km, is:

distance = 4(180 - x - 4y)/3 = 4(180 - 165/4 - 4(5))/3

Note: The answer cuts off at the point above. There's a limit to how long ChatGPT answers can be. With ChatGPT, you can regenerate an answer to give it a second shot. Regenerating this answer provided an even more incorrect answer, but at least it's less complicated. It incorrectly states that any of the friends could walk 16.67km, but how could they walk 16.67km down a 5km road? A person wouldn't answer the question in this manner.

Let's call the four friends A, B, C, and D.

We can start by assuming that each of them walks the maximum distance possible, without making any visits. Therefore, each of them walks 5 km in 1 hour (since they walk at a rate of 4 km/h). After one hour, they all meet at a point 5 km down the road.

From here, we can start adding in the visits. Let's say that A, B, and C visit someone for five minutes. During this time, D walks as far down the road as possible, without going past the 3 hour time limit.

Since D has already walked 5 km, and there are still 2 hours and 55 minutes left, D can walk an additional 11 km (since 4 km/h x 2.92 h = 11.68 km). Therefore, the furthest down the road that D can walk is 16 km (5 km + 11 km).

Next, let's say that A and B visit someone else for five minutes, while C and D continue walking down the road. Again, we want to find the furthest distance that C and D can walk without going past the 3 hour time limit.

Since C and D have already walked 5 km, and there are still 2 hours and 50 minutes left, they can walk an additional 11.33 km (since 4 km/h x 2.83 h = 11.33 km). Therefore, the furthest down the road that C and D can walk is 16.33 km (5 km + 11.33 km).

Finally, let's say that A, B, and C visit someone else for five minutes, while D continues walking down the road. Again, we want to find the furthest distance that D can walk without going past the 3 hour time limit.

Since D has already walked 5 km, and there are still 2 hours and 45 minutes left, D can walk an additional 11.67 km (since 4 km/h x 2.75 h = 11.67 km). Therefore, the furthest down the road that D can walk is 16.67 km (5 km + 11.67 km).

Therefore, the furthest down the road that any friend can walk is 16.67 km
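The 5km answer can be checked with a few lines of arithmetic. This is only a sketch: the question doesn't say how many visits there are, so `visits` is a hypothetical parameter, and I'm assuming nobody walks while visiting.

```python
# Sketch of the walking problem: the distance is capped by the road's length.
# Assumptions (mine): the number of visits is a free parameter because the
# question doesn't state it, and no walking happens during a visit.

def furthest_distance_km(road_km: float,
                         speed_kmh: float,
                         limit_h: float,
                         visits: int = 0,
                         visit_min: float = 5.0) -> float:
    """Furthest point down the road one friend can reach within the limit."""
    visiting_h = visits * visit_min / 60.0
    walking_h = max(0.0, limit_h - visiting_h)
    # You can't walk further down the road than the road goes.
    return min(road_km, speed_kmh * walking_h)


if __name__ == "__main__":
    print(furthest_distance_km(5.0, 4.0, 3.0))             # no visits
    print(furthest_distance_km(5.0, 4.0, 3.0, visits=21))  # 105 minutes of visits
```

Walking the whole road takes 75 minutes at 4km per hour, which leaves 105 of the 180 minutes for visiting. Even a friend who makes 21 five-minute visits still reaches the end, so the answer is 5km.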

Made Up Case About Referrals

It's easy to get ChatGPT to imagine case law. It seems to mostly do that! This could be improved by training a model like ChatGPT on Canadian law, which I don't think was done. In the example below it makes up the case, gets the (imaginary) citation format wrong, and I don't think this summary is even based on a real case. The reason I doubt there's a case like this is that lawyers aren't allowed to pay referral fees to non-lawyers at all.

Lawyers Are Safe For Now

Five years ago, the answers above would have seemed impossibly good for a machine learning model. Maybe five years from now they'll be much better, or perhaps this technology will plateau. But for the time being, lawyers' jobs are not in danger from ChatGPT.

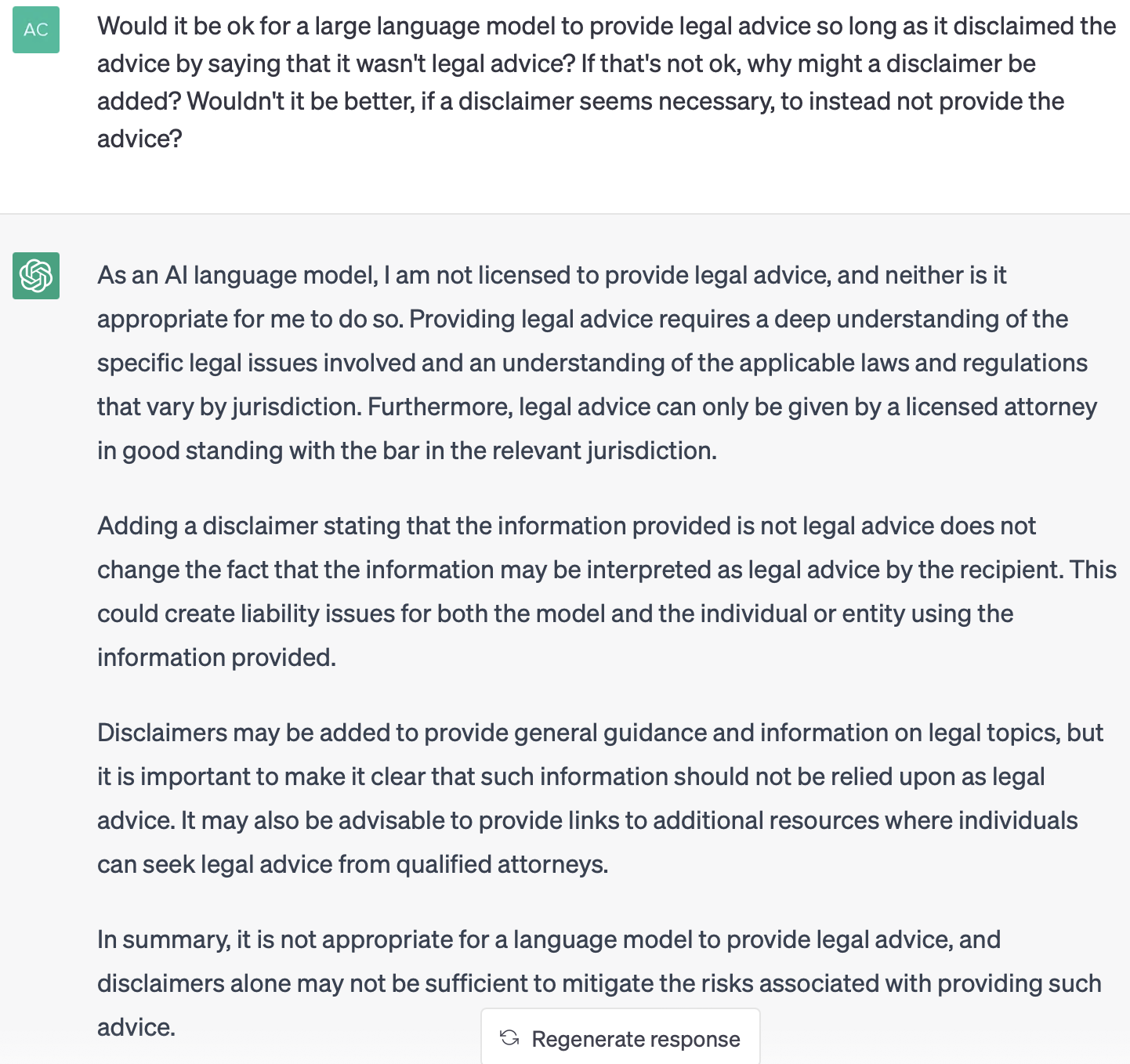

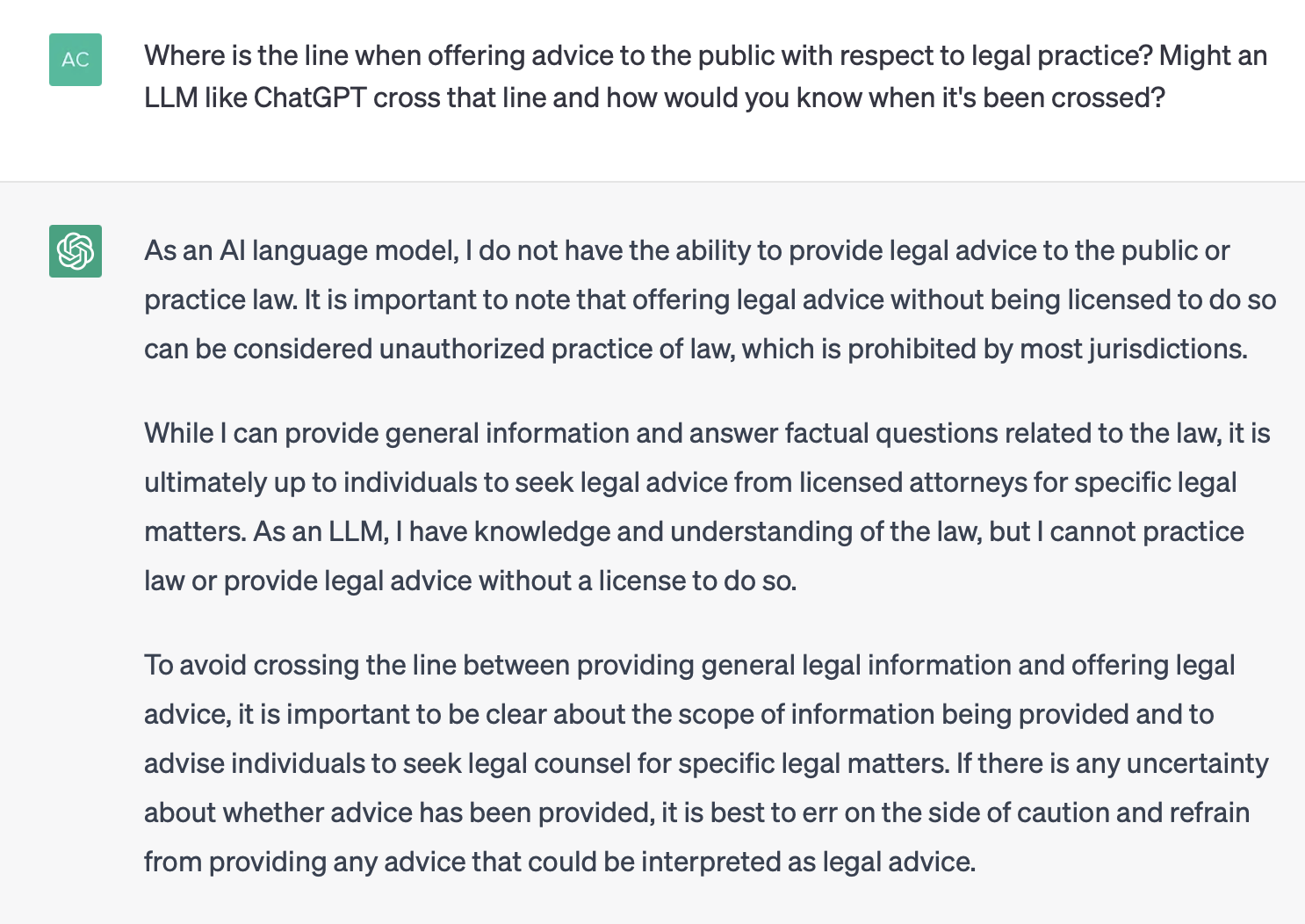

But is ChatGPT safe from lawyers? ChatGPT's answers often disclaim "I am not a lawyer" but prefacing a legal answer with "I am not a lawyer" is not a get out of jail free card. If it's legal advice, it's prohibited, since computer programs aren't licensed to practice law in Ontario (maybe in a few years?). ChatGPT knows this too.

So although ChatGPT isn't threatening many lawyers, lawyers may end up threatening it. ChatGPT knows this too.

When it comes to what best serves the public, the ChatGPT answer is that it's better to have no lawyer than one that's potentially unreliable.